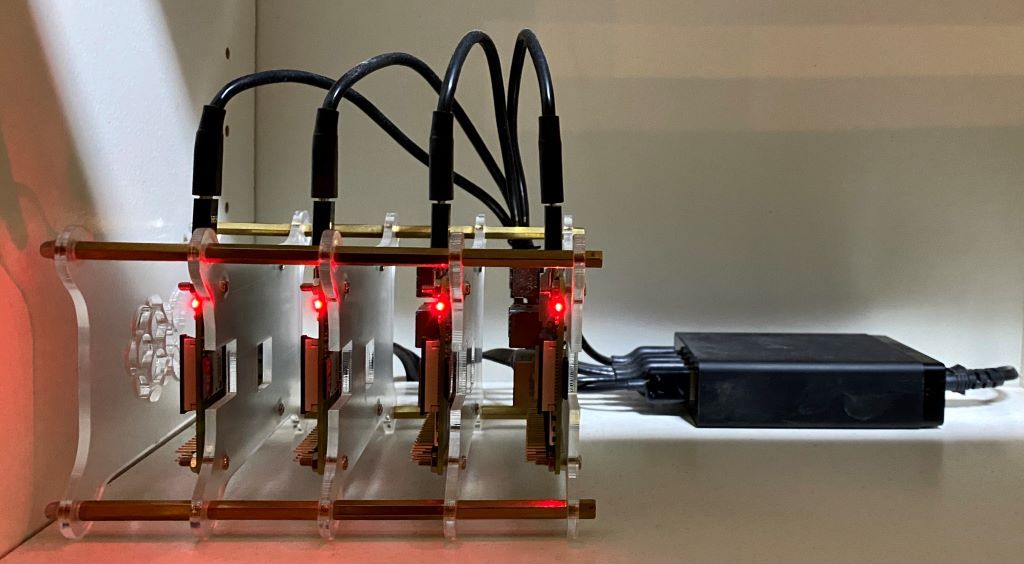

Creating a multi-arch K8s cluster - Part 3 - Install Docker and Kubernetes

The following steps have to be done on all Raspberry Pis in the cluster!

Prepare Docker installation

Enable the Linux kernel control groups (cgroups) for Docker and Kubernetes. Cgroups are responsible for isolating and limiting resources such as CPU and memory.

To do so, the boot firmware file has to be edited. SSH into every Pi and open the file:

ssh admin@<ip-address-of-the-pi>

sudo nano /boot/firmware/cmdline.txt

Append the following parameters to the end of the first line, separated by spaces:

cgroup_enable=cpuset cgroup_enable=memory cgroup_memory=1 swapaccount=1
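If you prefer not to edit the file by hand on every Pi, the change can also be scripted. The following is a minimal sketch in POSIX sh, assuming GNU grep and GNU sed (as shipped with Ubuntu) and that cmdline.txt consists of a single line; the function name append_cgroup_params is hypothetical. It only appends parameters that are not already present, so running it twice does not duplicate them.

```shell
# Hypothetical helper: append the cgroup parameters to the first (and only)
# line of a Raspberry Pi cmdline.txt, skipping any that are already present.
append_cgroup_params() {
  local file="$1"
  local p
  for p in cgroup_enable=cpuset cgroup_enable=memory cgroup_memory=1 swapaccount=1; do
    # -q quiet, -w whole-word match, -F fixed string (no regex)
    if ! grep -qwF -- "$p" "$file"; then
      # Append " <param>" to the end of line 1 only (GNU sed in-place edit).
      sed -i "1 s/\$/ ${p}/" "$file" || return 1
    fi
  done
}
```

For example, paste the function into a root shell (sudo -i) and run: append_cgroup_params /boot/firmware/cmdline.txt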

It's also recommended to reduce the amount of memory reserved for the GPU of the Raspberry Pi, which leaves more free memory for the OS.

Edit the following file:

sudo nano /boot/firmware/syscfg.txt

Append the following line to the end of the file, then save and exit the editor:

gpu_mem=16
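The same idea works for the GPU memory setting. This POSIX sh sketch (hypothetical function name set_gpu_mem, GNU sed assumed) replaces an existing gpu_mem= line instead of adding a second one, and otherwise appends the setting on a new line.

```shell
# Hypothetical helper: set gpu_mem=<value> in a Raspberry Pi config file,
# replacing an existing gpu_mem line or appending a new one.
set_gpu_mem() {
  local file="$1" value="${2:-16}"
  if grep -q '^gpu_mem=' "$file"; then
    # Update the existing setting in place (GNU sed).
    sed -i "s/^gpu_mem=.*/gpu_mem=${value}/" "$file"
  else
    # Make sure the file ends with a newline before appending.
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
      echo >> "$file"
    fi
    echo "gpu_mem=${value}" >> "$file"
  fi
}
```

For example, in a root shell (sudo -i): set_gpu_mem /boot/firmware/syscfg.txt 16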

Install Docker

The next step is to install Docker and to disable automatic updates of the Docker packages. This prevents premature updates of the Docker services and incompatibilities between the Docker and Kubernetes versions.

sudo apt-get install docker.io -y

sudo apt-mark hold docker.io

sudo systemctl enable docker.service

sudo reboot

By default, Docker on Ubuntu does not use systemd as its cgroup driver. To change that, SSH into every Pi again and configure the Docker daemon:

sudo service docker stop

sudo nano /etc/docker/daemon.json

Copy & paste the following content into the file, save and exit the editor:

{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}

Now start the Docker daemon and check if the configuration was successful:

sudo service docker start

sudo docker info

The output of the docker info call should contain:

Cgroup Driver: systemd

If this is not shown in the output, check the created daemon.json file and restart the Docker daemon.
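To check the driver without scanning the whole docker info output, Docker's --format option can print just the CgroupDriver field. The function below is a small POSIX sh sketch with a hypothetical name; the optional argument only exists to make it easy to test without a running Docker daemon.

```shell
# Hypothetical helper: succeed only if Docker reports the systemd cgroup driver.
# An optional argument overrides the value (handy for testing); otherwise the
# driver is read from "docker info".
verify_cgroup_driver() {
  local driver="$1"
  if [ -z "$driver" ]; then
    driver="$(docker info --format '{{.CgroupDriver}}' 2>/dev/null)"
  fi
  if [ "$driver" = "systemd" ]; then
    echo "OK: Docker uses the systemd cgroup driver"
  else
    echo "WARNING: Docker reports cgroup driver '${driver:-unknown}'" >&2
    return 1
  fi
}
```

For example, in a root shell (sudo -i), paste the function and run verify_cgroup_driver; a non-zero exit status means daemon.json should be checked again.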

When all these steps are done, we have a bunch of Raspberry Pis with a running Docker installation. What follows are the "magical" steps to install Kubernetes, which are not magical at all, because they simply follow the Kubernetes installation guide.

The default Ubuntu Server + Docker installation, together with the steps described here, already enables everything Kubernetes needs. Nevertheless, it's recommended to run a few checks:

1. Check whether the br_netfilter module is loaded (the output should show br_netfilter):

sudo lsmod | grep br_netfilter

2. Check whether iptables can see bridged traffic (the output should show bridge-nf-call-iptables = 1):

sudo sysctl --all | grep bridge-nf-call-ip
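Both checks can be bundled into a small POSIX sh script. This is a sketch with hypothetical function names; if either check fails, the Kubernetes documentation describes loading the module with sudo modprobe br_netfilter and persisting the bridge sysctl settings in a file under /etc/sysctl.d/.

```shell
# Hypothetical helper: read "sysctl" output on stdin and succeed only if
# net.bridge.bridge-nf-call-iptables is set to 1.
bridge_iptables_enabled() {
  grep -qE '^net\.bridge\.bridge-nf-call-iptables[[:space:]]*=[[:space:]]*1$'
}

# Hypothetical helper: run both checks from the list above on this Pi.
preflight_checks() {
  local rc=0
  if lsmod | grep -qw br_netfilter; then
    echo "OK: br_netfilter is loaded"
  else
    echo "MISSING: br_netfilter is not loaded" >&2
    rc=1
  fi
  if sysctl --all 2>/dev/null | bridge_iptables_enabled; then
    echo "OK: net.bridge.bridge-nf-call-iptables = 1"
  else
    echo "MISSING: net.bridge.bridge-nf-call-iptables is not 1" >&2
    rc=1
  fi
  return "$rc"
}
```

For example, in a root shell (sudo -i), paste both functions and run preflight_checks on every Pi.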

Install Kubernetes

If these checks were successful, it's time to install the Kubernetes tools on all Raspberry Pis:

sudo apt-get update && sudo apt-get install -y apt-transport-https curl

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

cat <<EOF | sudo tee /etc/apt/sources.list.d/kubernetes.list
deb https://apt.kubernetes.io/ kubernetes-xenial main
EOF

sudo apt-get update

sudo apt-get install -y kubelet kubeadm kubectl

sudo apt-mark hold kubelet kubeadm kubectl

The last command also prevents automatic updates of the Kubernetes tools. Upgrading Kubernetes must be done manually, node by node and step by step. This will be covered in another blog post.
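To confirm that both the Docker and the Kubernetes packages are really pinned, the output of apt-mark showhold can be checked. A small POSIX sh sketch with a hypothetical function name:

```shell
# Hypothetical helper: read "apt-mark showhold" output on stdin and succeed
# only if every package given as an argument is on hold.
all_held() {
  local held pkg
  held="$(cat)"
  for pkg in "$@"; do
    # -x: the whole line must equal the package name
    if ! printf '%s\n' "$held" | grep -qxF -- "$pkg"; then
      echo "not on hold: $pkg" >&2
      return 1
    fi
  done
  echo "all on hold: $*"
}
```

Usage: apt-mark showhold | all_held docker.io kubelet kubeadm kubectl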

Reload the systemd configuration and restart the kubelet:

sudo systemctl daemon-reload && sudo systemctl restart kubelet

In preparation for the Network File System (NFS) setup in Part 4 of this series, it's recommended to install the common NFS packages for client support:

sudo apt-get install nfs-common

The next steps initialize the first Raspberry Pi as the master (control plane) node and add the other Pis to it as worker nodes. Make sure to run each step on the correct node!

Prepare the control plane (master)

For the control plane (master) node (the first Raspberry Pi) only:

Pull all necessary container images beforehand to check connectivity and avoid warnings during setup. Then initialize the control plane node:

sudo kubeadm config images pull

sudo kubeadm init

Make the kubectl command work for the current user (admin):

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Prepare cluster network

Prepare the cluster network layer. This guide uses Weave Net: I have fully tested it with a multi-architecture cluster and it works reliably. Other network plugins may work as well, but I haven't tested them yet.

kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

Configure kube-apiserver

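To enable ResourceQuotas and LimitRanges for namespaces, the kube-apiserver configuration needs to be adapted. kubeadm stores the cluster configuration, including the kube-apiserver settings, in the kubeadm-config ConfigMap in the kube-system namespace. Note that this ConfigMap is read by kubeadm (for example during upgrades), while the running kube-apiserver takes its flags from the static Pod manifest /etc/kubernetes/manifests/kube-apiserver.yaml.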

Edit this with:

kubectl edit configmap -n kube-system kubeadm-config

In the apiServer: section, add the following line under extraArgs:

enable-admission-plugins: NodeRestriction,NamespaceLifecycle,LimitRanger,ResourceQuota,PersistentVolumeClaimResize
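On a kubeadm cluster, the flags the running kube-apiserver actually uses are listed in its static Pod manifest, by default /etc/kubernetes/manifests/kube-apiserver.yaml. The POSIX sh sketch below (hypothetical function name) prints the current value of --enable-admission-plugins from such a file, which is a quick way to see which plugins are active; reading the manifest usually requires root.

```shell
# Hypothetical helper: print the value of --enable-admission-plugins from a
# kube-apiserver static Pod manifest (kubeadm's default path if none given).
admission_plugins() {
  local manifest="${1:-/etc/kubernetes/manifests/kube-apiserver.yaml}"
  # Matches list entries such as "    - --enable-admission-plugins=A,B"
  sed -n 's/^[[:space:]]*- --enable-admission-plugins=//p' "$manifest"
}
```

For example, in a root shell (sudo -i) on the master node, paste the function and run admission_plugins.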

For more details about Kubernetes admission controllers visit: https://kubernetes.io/docs/reference/access-authn-authz/admission-controllers/

Join worker nodes

After the master control plane node has been rebooted successfully, the worker nodes can join the cluster. To do so, SSH into the master node again and first generate a Kubernetes join token:

ssh admin@<ip-address-of-master-node>

sudo kubeadm token create --print-join-command

Copy the printed command, which will look like this:

kubeadm join x.x.x.x:6443 --token xxxxx --discovery-token-ca-cert-hash sha256:xxxxxx

Now SSH into each of the other Raspberry Pis and run the kubeadm join ... command. Don't forget to use sudo!

sudo kubeadm join x.x.x.x:6443 --token xxxxx --discovery-token-ca-cert-hash sha256:xxxxxx

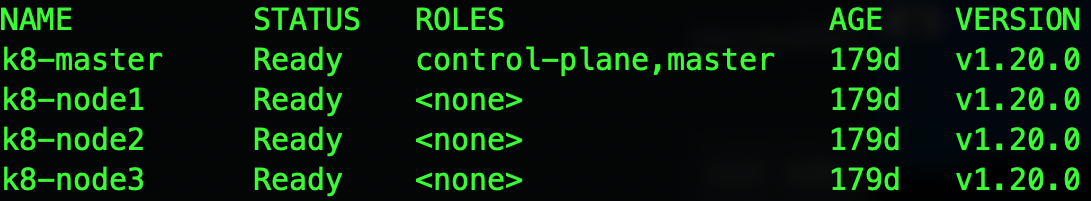

Back on the master node, the following command shows whether all nodes have successfully joined the cluster:

kubectl get nodes

The command should generate an output like this:

It may take several minutes until all nodes have pulled the necessary images and report the status Ready.

You can watch the progress with:

watch kubectl get nodes
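Instead of watching the list by hand, a script can wait until every node reports Ready. The POSIX sh sketch below uses hypothetical function names and reads the output of kubectl get nodes --no-headers, where the second column is the STATUS. kubectl also offers kubectl wait --for=condition=Ready nodes --all for the same purpose.

```shell
# Hypothetical helper: read "kubectl get nodes --no-headers" output on stdin
# and print the names of nodes whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Hypothetical helper: poll every 10 seconds until all nodes are Ready.
wait_for_nodes() {
  local nodes pending
  while true; do
    nodes="$(kubectl get nodes --no-headers 2>/dev/null)"
    pending="$(printf '%s\n' "$nodes" | not_ready_nodes)"
    # An empty node list (e.g. kubectl failed) does not count as Ready.
    if [ -n "$nodes" ] && [ -z "$pending" ]; then
      break
    fi
    echo "waiting for: $(echo "$pending" | tr '\n' ' ')"
    sleep 10
  done
  echo "all nodes are Ready"
}
```

Usage on the master node: paste both functions and run wait_for_nodes.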

Congratulations! You have set up your own Kubernetes cluster.

To add more worker nodes to the cluster, repeat Parts 1 to 3 of this blog post series and finish with kubeadm token create ... and kubeadm join ....

In Part 4 of the series, we will install a software load balancer and set up NFS with dynamic volume provisioning.

Additional step to make life easier:

Install kubectl on your local environment to work from a remote terminal with Kubernetes tools.

The Kubernetes documentation contains a detailed description of all necessary steps.

Finally, copy the content of the $HOME/.kube/config file of the admin user on the master node to ~/.kube/config in the home folder of your local workstation. Keep in mind that you then work as administrator with full access rights to the cluster!

mkdir -p ~/.kube

scp admin@<ip-address-of-master-node>:/home/admin/.kube/config ~/.kube/config
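Because this file contains cluster-admin credentials, it's sensible to make it readable by your user only. A small POSIX sh sketch (hypothetical function name) that tightens the permissions and prints the API server address the file points to, as a quick sanity check before running kubectl get nodes from the workstation:

```shell
# Hypothetical helper: restrict a kubeconfig to the current user and print
# the API server address(es) it contains.
secure_kubeconfig() {
  local cfg="${1:-$HOME/.kube/config}"
  # Owner read/write only; the file grants full admin access to the cluster.
  chmod 600 "$cfg" || return 1
  # Show the "server:" entries, e.g. https://<ip-address-of-master-node>:6443
  sed -n 's/^[[:space:]]*server:[[:space:]]*//p' "$cfg"
}
```

Usage on the workstation: secure_kubeconfig && kubectl get nodes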